Classical AI in JavaScript

In recent years, Aritificial Intelligence has recieved a lot of attention thanks to the re-birth of Neural Networks, and the advent of technologies like Siri,alex and self-driving cars. but the idea of AI is not new, infact it all started in 1960s, when a guy name Jhon McCarthy published his first paper on A.I called Programs with Common Sense. after him there were sevral people from really diverse backgrounds which influences the methodologies we use today….

side note:if you want to dive deep into the beginnings of AI, there is a great series of interviews conducted by MIT which you might find intresting… https://techtv.mit.edu/videos/10268-the-thinking-machine-1961—mit-centennial-film

although it might be tempting to think that classical AI is useless now, but it is still being used heavily… one of the most intresting field that utilize these algorithms is the games Industry and in this post we are going to be learning about some of these algorithms by creating our very own self-driving car(…..kind of). but before that, we need to understand what it really means to be intelligent, afterall our goal is to create an intelligent algorithm which can drive our car.

one of the most prominent trait of being intelligent, is being able to slove problems correctly and efficiently. and like most of the problem solving tasks in real life, we tend to search for best possible route to get the best solution. so this means that most of the problems can be solved by “searching for the optimum solution”, and that is exactly what we are trying to achieve in the field of A.I.

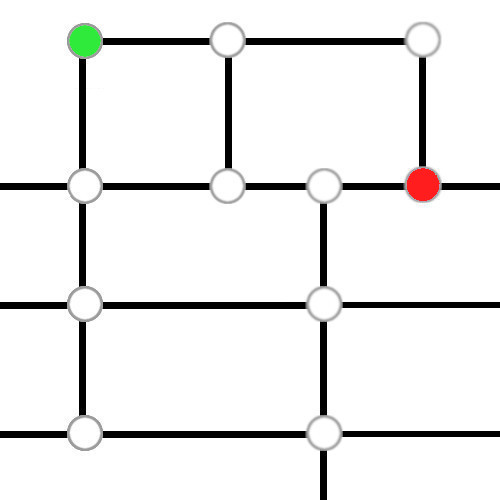

let’s take a simple problem (see, L-fig (1.2)), suppose you have an appointment at a company and you are damn late, now, your objective is to reach there as fast as possible. so go ahead take the controller and see if you could find the fastest way to reach your destination….

L-fig 1.2: use W,A,S,D keys to move the car and reach the destination (red marker)

in the above exercise, we are able to solve this problem pretty easily, because….. we are humans,but if we want our computer to solve this problem we first need to explain it, in a language that they can understand. which brings us to our next topic, “spaces”…

State Space and Search Space

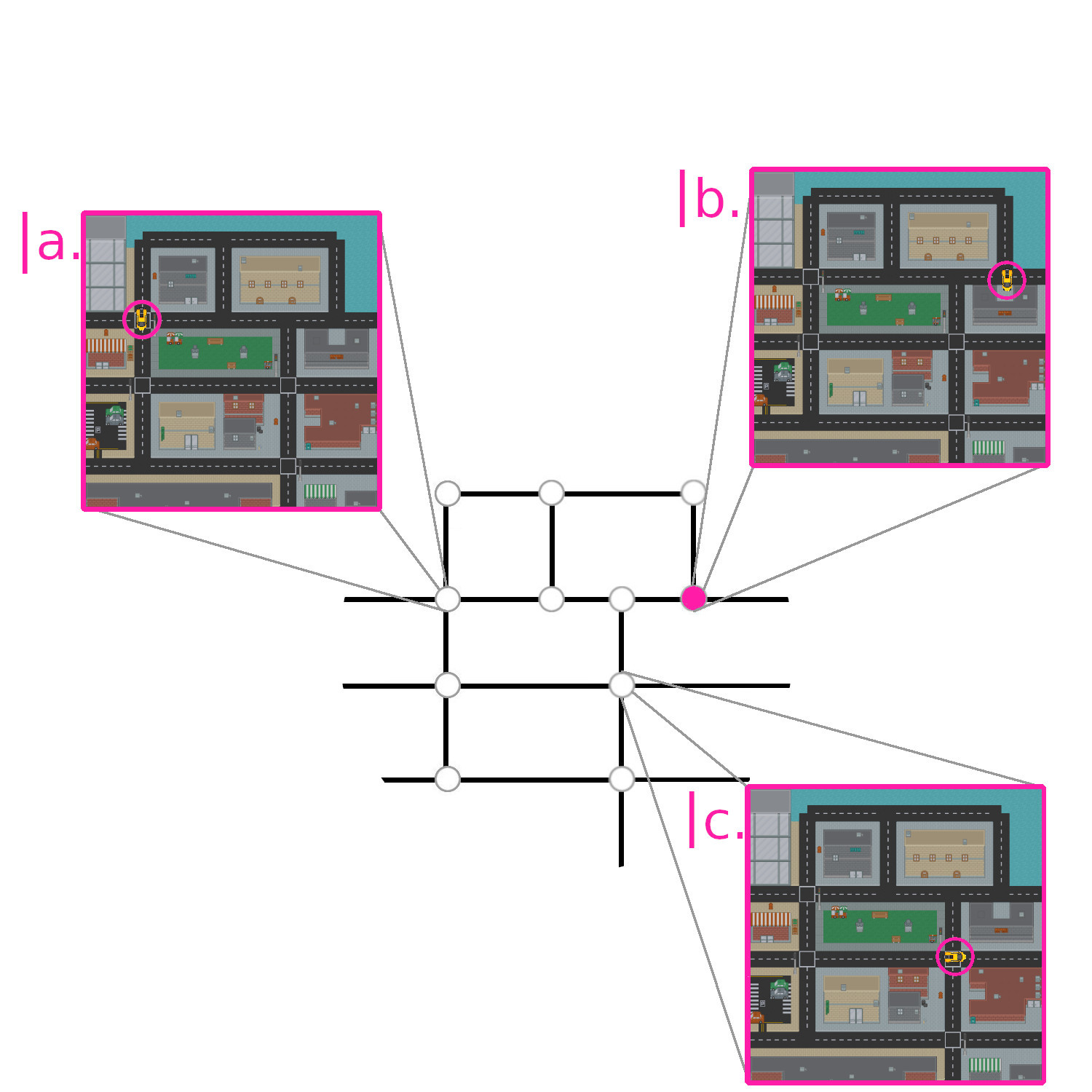

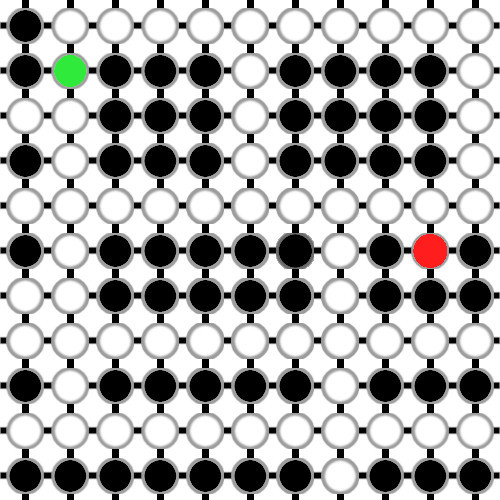

In classical A.I litrature, there are 2 types of spaces, state space and search space, state space is what you see i.e, the physical configuration of our problem.(like the scene you see in L-fig-1.2 or driving a physical car and reaching your office on time (by the way, next time, set alarm! ) whereas, search space is the abstract configuration usually represented by tree or a graph data-structure , which is necessary because search space helps us translate our problem-environment(city-map in our case) in the language that computers can understand and potentially use it to solve the problem. it can be of many form,for e.g the search space of L-fig (1.2) could be of the form fig-1.3(a),fig-1.3(b) or even fig-1.3(c)(which is a tree). inwhich each node represent a specific state in our state space and each edge represent the path from one state to another(see fig-1.4).

fig-1.3(a)(left),fig1.3(b)(center) and fig 1.3(c)(right): where, green node represent start state and red node represent the goal state (we will learn more about this later in this blog post.)

fig 1.4: as mentioned earlier, each node represent a perticular state in the state space, here in this figure, we expand some of these nodes in order to show what state these nodes represents. namely, state(b) represent the goal state of our problem and (a) and (c) represent some other states in our state space

Now, these searches can broadly be categorised into 2 types, informed search and uninformed search:-

Informed search: In informed search, we have some information about our environment like for example in L-fig-1.2 we can see where our goal is! and we know how to reach there… which shows that, we have full information about our environment, which is the reason why we did’nt need to drive through every single road in order to find our goal because we already knew which road/path leads to our destination.

Uninformed search : on the other hand in un-informed search, we don’t know anything about our environment which means that we have to search the entire space(roads/streets in our case) one by one in order to find our goal. In the next section we are first going to take a deep dive into uninformed search and its algorithm and after that we will take a look at some of the informed search stratigy.

Uninformed Search Strategies

As mentioned above, in uninfomed search, we have little to no information about our environment and it turns out that, in most of the real life problems we don’t know much about our environment either. which is the reason why these algorithms are much more common in daily CS works then the informed ones.In our case, we can think of uninformed search as if we were blindly searching the entire space in hopes of finding our destination as illustrated in L-fig 1.5 :-

now that we know what it feels like to blindly searching the space, lets take a look at how our computers search in this environment by looking at some of these algorithms…

Depth First Search

<img class=”img2Center”src=”/assets/img/posts_imgs/classical-ai/body/dfs.gif”/>

Now, suppose that our search space is a rectangular shaped coloney/street/block and we have to meet all our neighbours ,so instead of first visiting our adjecent-horizontal neighbours (the ones that are on the left and right of us) we first went-a-head and visit the neighbours that are “in-front” of us and we keep doing that untill we reach the end of our street and then we visit the horizontal neigbours(see fig.1.7), in-other words, we are searching depth-wise instead of breadth wise.

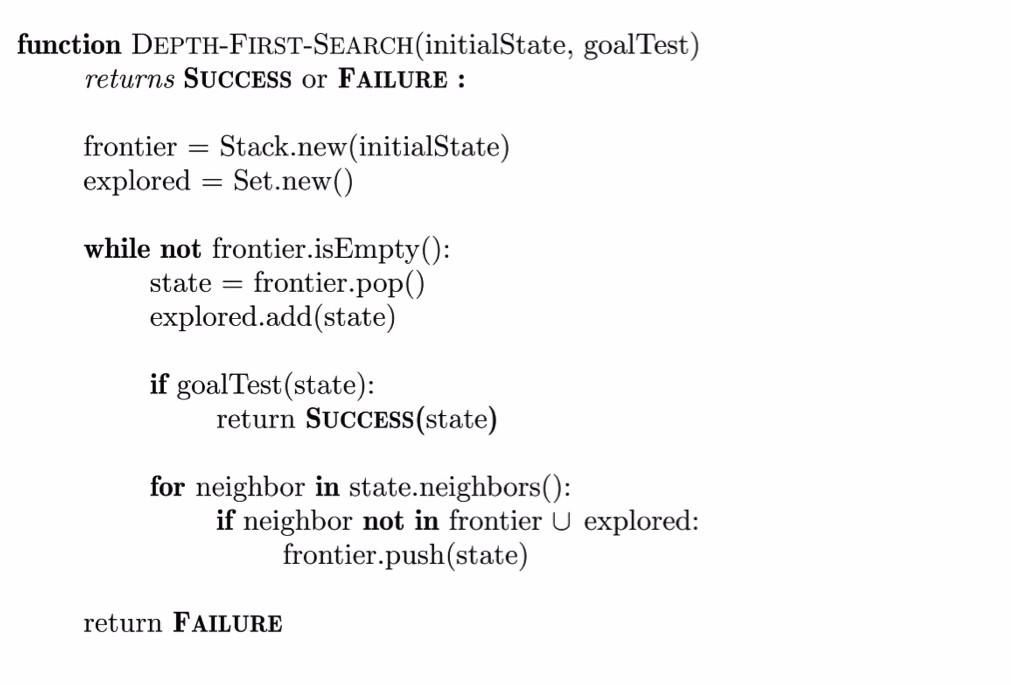

More-Techinically, in DFS we take the neighbours/childs of the current node and put it inside a stack(LIFO) and then take the last added node inside our stack and make that our current node, we repeat this process untill we reached our goal state or untill we exaust our search space.this method leads to a special behivour of searching depth-wise (which you can see on your right).

for javascript implimentation, click here.

fig 1.6: dfs in action, see how it works under the hood .

Breadth First Search

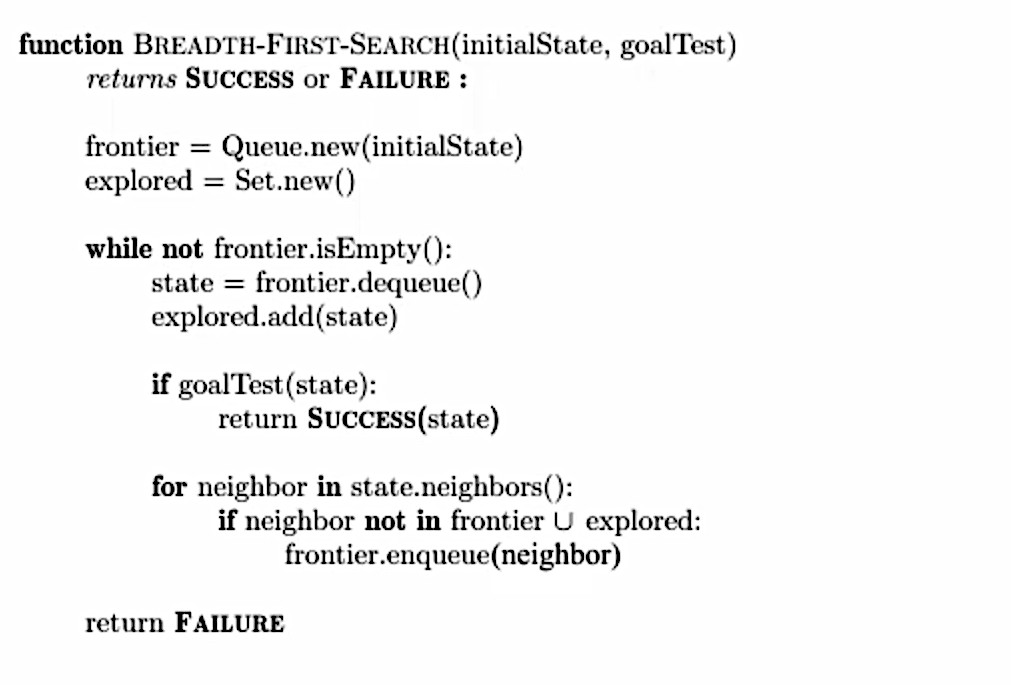

BFS is really similar to DFS but here, instead of using Stack, we use Queue(FIFO) which leads to another special behivour of searching breadth-wise (hence, Breadth-First Search), and just like any algorithm, its better to see it in action(see, right).

for javascript implimentation, click here.

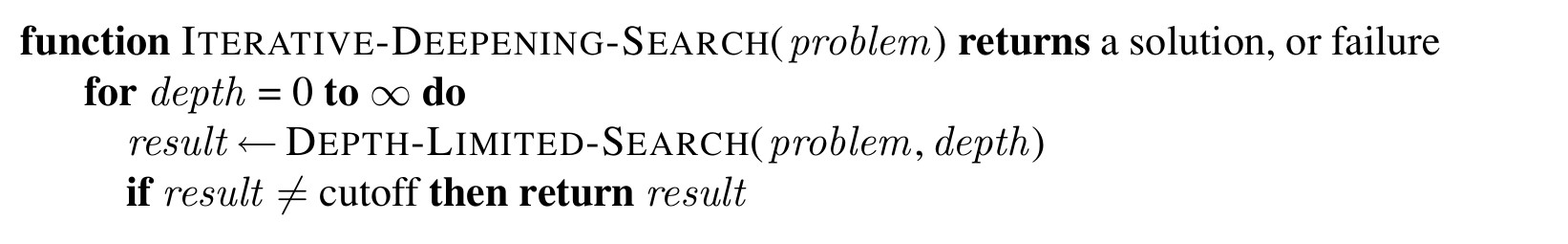

IDDFS

Here, We limit the depth of our dfs search using a threshold and then iteratively increase this threshold to our liking, the reason why we do this is because, suppose our search space is infinit in which case the depth is infinit as well, which suggest the fact that if our goal state is in the brach horizontally adjecent to the current one then we will never be able to find it(see, right) but by using this iterative-deepning method we don’t have to keep searching to the deepest end of the current branch, we can just skip to the next branch once we hit the threshold(see fig.1.8)

fig.1.7: visualization of iddfs

Now suppose, we have some information about our search space, just like in (L-fig 1.2) i.e, we know where are goal is, which means that we no longer have to search the entire space, instead we feed the information we have about our goal into our algorithm and we are done! , in the next section we are going to take a look at some of these algorithms.

Informed Search Strategies

the most common informed search strategy is known as best-first search, here, instead of treating all the nodes as equal and expand them one by one(as we did in uninformed search algorithms above), we tend to prioritise nodes based on something called an evaluation function (\(f(n)\)). for example, in our problem, we have to reach our destination as fast as possible, in other words, we need to find the “shortest path” from our home to our office and for that,we can search the space by choosing the roads(nodes) that are closer to our destination using this evaluation function. which inturn, leads to finding our shortest path!

Greedy best-first search

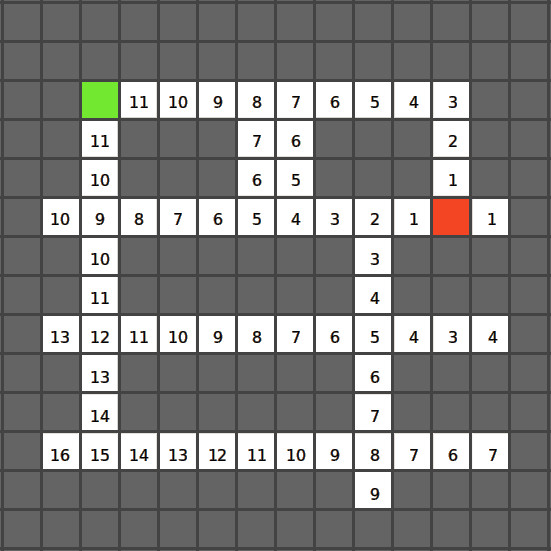

In Greedy best-first search we choose the evaluation function to just be the distance( euclideon or manhatten ) between a perticular node “n” to our goal node “w” (which is also known as heuristic function \(h(x)\) ) and prioritise the ones that evaluates least value(i.e, neighbours that are closes to our goal). and if we examine the outputs of our evaluation function on our problem(see, fig-1.8), we can see that the nodes that are closer to our goal node has less value then the ones that are far… you can also observe that alot of nodes has same value which suggest the fact that there are more then one best path to reach our goalstate.

[f(x) = h(x)]

fig 1.8:Weight map of Greedy Best-first Search

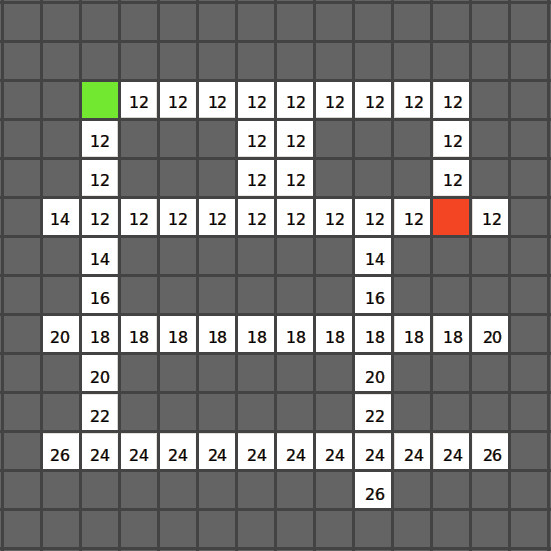

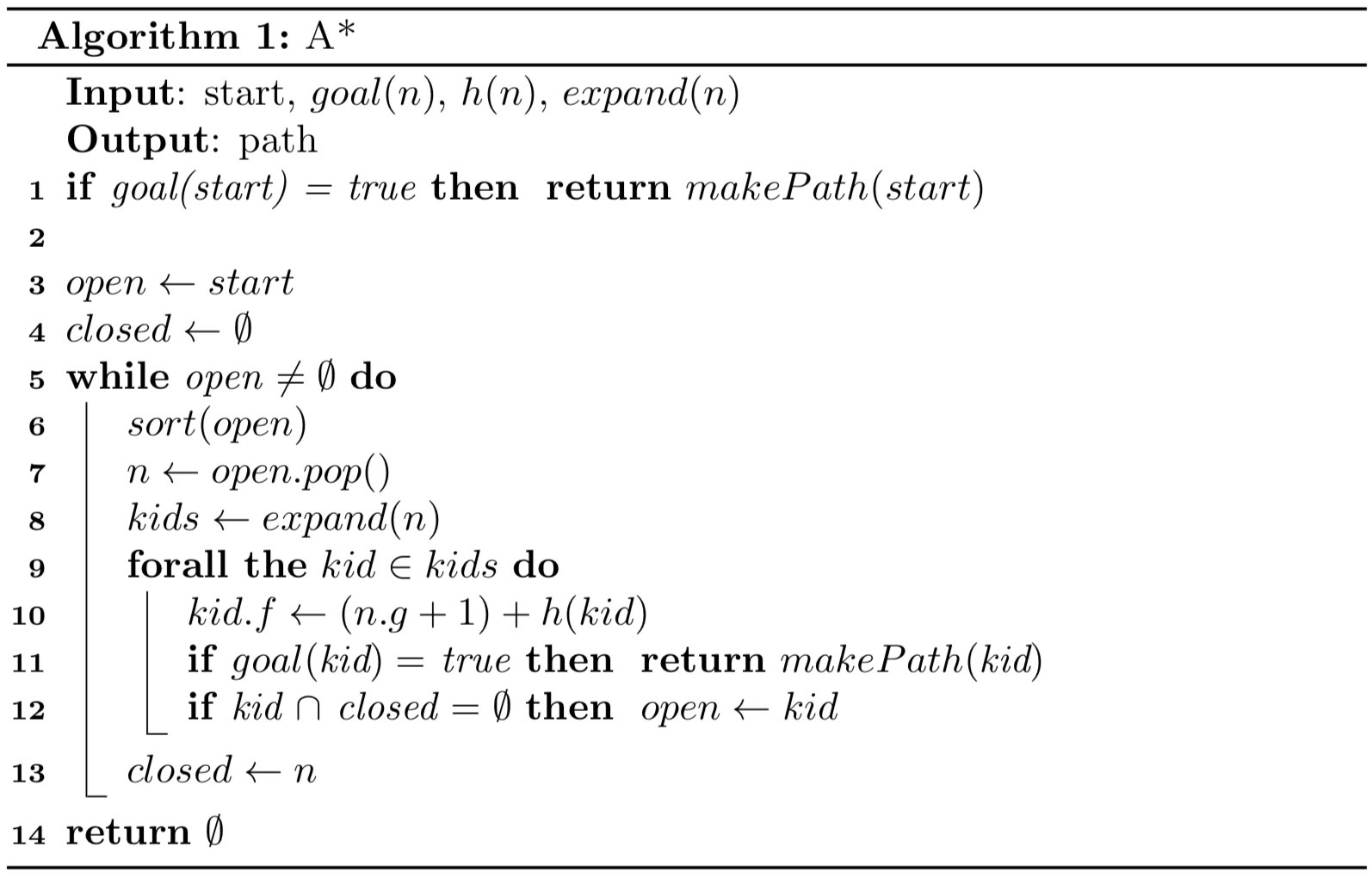

A* search

apart from calculating the distance from a perticular state to our goal state (\(h(x)\) )in order to find our best path, we can also add another layer of assurity by calculating the distance from our start state to that perticular state(\(e(x)\) ). which ensure that we not only find the best path relative to our goal node but also to our start node which inturn helps us to find the shortest path from our start state to our goal state. and this is exactly the technique that we use to solve our problem!(fig-1.6).

[f(x) = h(x) + e(x)]

fig 1.9 : Weight map of A* Search : Question: why most of the values are the same?? (Hint: see the formula!!)

fig 1.10 : using A* search on our problem

although there are several other algorithms for finding the shortest paths (like dijkstra,prims etc) but the reason why we choose A* star above all other is because of its speed which is the reason why it is been used heavily in the games industry espicially in creating enemy A.I which can track you down without bumping into the walls… so every time you get trapped by enemies you can curse astar for that ;) .

so thats all folks! we have covered a lot of ground today, we went from knowing nothing about A.I to creating our own self driving car, now i dare you to create something cool with it… like a self driving helicopter, or a zombie game inwhich you act as a destination for zombie so that they chaze you in your map….so go ahead and create some thing cool with these techniques, and don’t forget to share the fun with us!

And if you sill want to learn more and try some fun interactive demos on Classical A.I algorithms I recommend to try these:-

Basically I plagerize this book xD: Artificial Intelligence: A Modern Approach Book a.k.a bible of A.I

Source code of all the algorithms and more:https://github.com/aimacode/aima-javascript

Another Great collection of article coverning ai used in games: https://github.com/libgdx/gdx-ai

Fun:- Great Playground:- https://qiao.github.io/PathFinding.js/visual/